Kubernetes cluster on AWS: production use

This blog post is available in English.

PRODUCTION-GRADE K8S CLUSTER DEPLOYMENT ON AWS CLOUD

These AWS CloudFormation templates and scripts automatically set up a flexible, secure, fault-tolerant Kubernetes cluster in a private AWS VPC, in the configuration of your choice. The project's main goal is a simple, painless, script-less Kubernetes deployment in a single step.

We provide two deployment versions with the same underlying AWS VPC topology:

- full scale: fault-tolerant, production-grade architecture (multiple masters, multiple nodes, NAT gateways),

- small footprint: single master, single NAT instance, single node (for testing, demos and first steps)
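One way to see what the extra masters in the full-scale version buy you is etcd quorum arithmetic. The Python sketch below encodes the two versions using only the counts listed above, and assumes one etcd member per master, which is the usual kops layout; the class and function names are hypothetical helpers, not part of the project.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DeploymentProfile:
    """Resource counts taken from the two deployment versions described above."""
    name: str
    masters: int
    nodes: int
    nat_gateways: int     # managed NAT gateways (0 when the bastion acts as NAT instance)
    bastion_is_nat: bool  # small footprint: the bastion routes private-subnet traffic


FULL_SCALE = DeploymentProfile("full-scale", masters=3, nodes=3, nat_gateways=3, bastion_is_nat=False)
SMALL_FOOTPRINT = DeploymentProfile("small-footprint", masters=1, nodes=1, nat_gateways=0, bastion_is_nat=True)


def etcd_quorum(members: int) -> int:
    """Majority of members etcd needs to keep accepting writes."""
    return members // 2 + 1


def tolerated_master_failures(profile: DeploymentProfile) -> int:
    """Masters that can be lost while etcd keeps quorum.

    Assumption: one etcd member runs on each master (default kops layout).
    """
    return profile.masters - etcd_quorum(profile.masters)


for p in (FULL_SCALE, SMALL_FOOTPRINT):
    print(f"{p.name}: {p.masters} master(s), tolerates {tolerated_master_failures(p)} master failure(s)")
```

Both versions use self-healing AutoScaling groups that replace a failed master; the difference is whether the Kubernetes API stays available while the replacement boots. With three masters it does; with one it does not.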

The Kubernetes Operations ("kops") project and the AWS CloudFormation (CFN) templates, together with bootstrap scripts, automate the whole process. The result is a standard Kubernetes cluster with 100% kops compatibility, which you can manage from the bastion host, over OpenVPN, or through the HTTPS API exposed by the AWS ELB endpoint.
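Because the cluster stays kops-compatible, routine checks from the bastion host look like those for any other kops cluster. The Python sketch below only builds and prints the commands (dry run by default). The cluster name and bucket are hypothetical placeholders, and it assumes kops and kubectl are installed and configured on the bastion host.

```python
import shlex
import subprocess

# Hypothetical values: replace with the cluster name and the kops state-store
# S3 bucket created by your own stack.
CLUSTER_NAME = "k8s.example.internal"
STATE_STORE = "s3://example-kops-state-store"


def kops_command(*args: str, cluster: str = CLUSTER_NAME, state: str = STATE_STORE) -> list[str]:
    """Build a kops argv that targets this cluster's state store explicitly."""
    return ["kops", *args, "--name", cluster, "--state", state]


def run(argv: list[str], dry_run: bool = True) -> None:
    """Print the command; execute it only when dry_run is False."""
    print("$", shlex.join(argv))
    if not dry_run:
        subprocess.run(argv, check=True)


# Typical read-only checks from the bastion host:
run(kops_command("validate", "cluster"))
run(["kubectl", "get", "nodes", "-o", "wide"])
```

kops also reads the state store from the KOPS_STATE_STORE environment variable, so exporting it once on the bastion host avoids repeating --state.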

The project focuses on security, transparency and simplicity. This guide is intended mainly for developers, IT architects, administrators and DevOps professionals who plan to run their Kubernetes workloads on AWS.

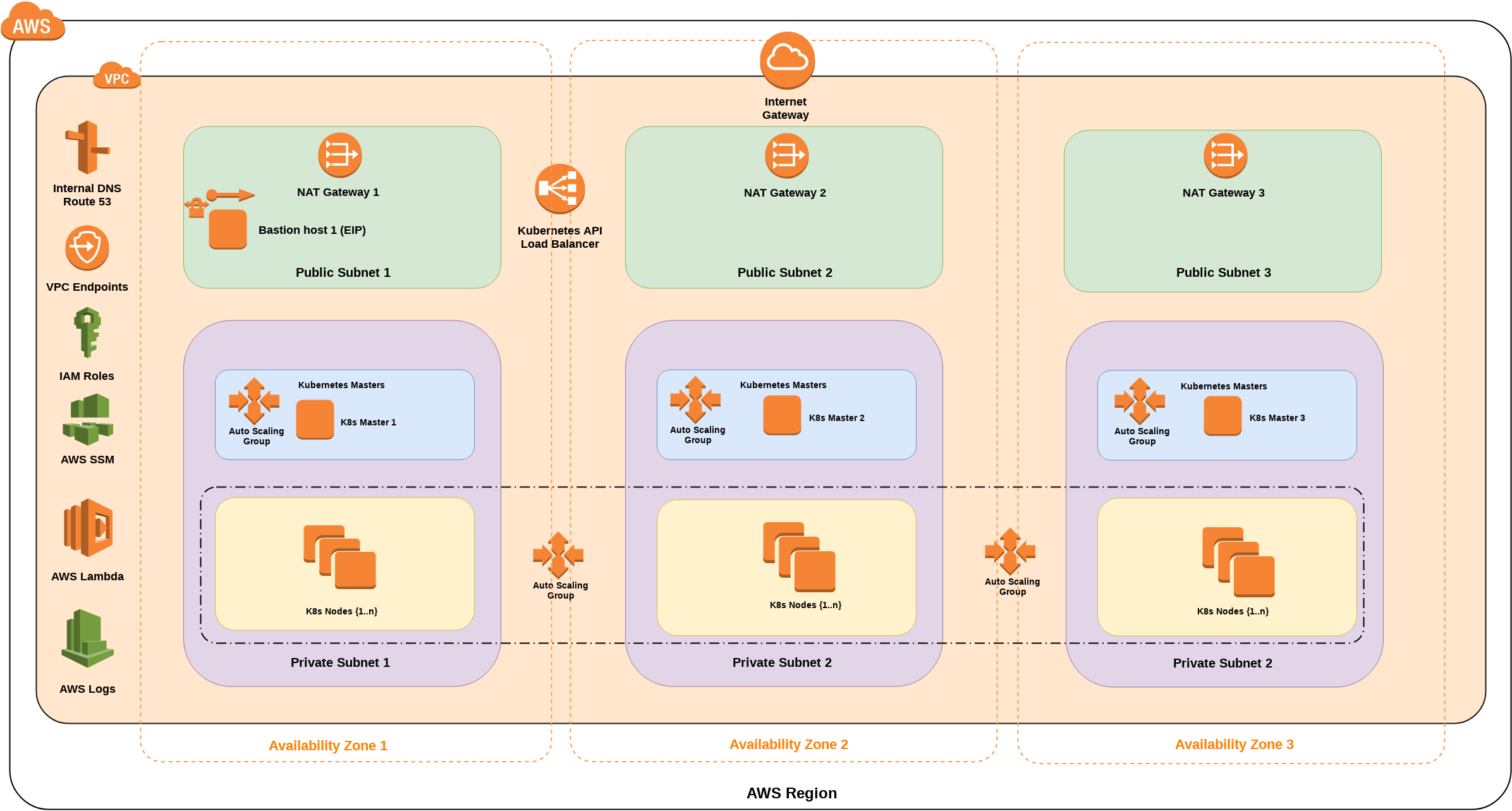

FULL-SCALE ARCHITECTURE

RESOURCES DEPLOYED

- one VPC: three private and three public subnets in three different Availability Zones, with gateway-type VPC endpoint routes to S3 and DynamoDB (free of charge),

- three NAT gateways, one in the public subnet of each Availability Zone,

- three self-healing Kubernetes Master instances, one in each Availability Zone's private subnet, each in its own AutoScaling group,

- three Node instances in one AutoScaling group spanning all Availability Zones,

- one self-healing bastion host in one Availability Zone's public subnet,

- four Elastic IP addresses: three for the NAT gateways, one for the bastion host,

- one internal (optionally public) ELB load balancer for HTTPS access to the Kubernetes API,

- two CloudWatch Logs groups: one for the bastion host, one for Kubernetes Docker pods (optional),

- one Lambda function for graceful teardown using AWS SSM,

- two security groups: one for the bastion host, one for the Kubernetes hosts (Masters and Nodes),

- IAM roles for the bastion host, the K8s Nodes and the Master hosts,

- one S3 bucket for kops state store,

- one Route53 private zone for VPC (optional)
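The "three private and three public subnets in three Availability Zones" layout means one private/public pair per AZ, all carved out of a single VPC range. The Python sketch below shows one way to plan such non-overlapping ranges with the standard ipaddress module. The 10.0.0.0/16 VPC CIDR, the /20 subnet size and the AZ names are assumptions for illustration, not values taken from the templates.

```python
import ipaddress

# Assumptions for illustration only: substitute the VPC CIDR and AZs you
# actually pass to the stack.
VPC_CIDR = ipaddress.ip_network("10.0.0.0/16")
AZS = ["eu-central-1a", "eu-central-1b", "eu-central-1c"]


def plan_subnets(vpc, azs, new_prefix=20):
    """Carve one private and one public subnet per AZ out of the VPC range."""
    blocks = vpc.subnets(new_prefix=new_prefix)  # equal-sized, non-overlapping blocks
    plan = {}
    for az in azs:  # first allocate the private subnets...
        plan[az] = {"private": next(blocks)}
    for az in azs:  # ...then the public ones
        plan[az]["public"] = next(blocks)
    return plan


plan = plan_subnets(VPC_CIDR, AZS)
for az, nets in plan.items():
    print(az, "private", nets["private"], "public", nets["public"])
```

With these assumptions the private subnets get 10.0.0.0/20, 10.0.16.0/20 and 10.0.32.0/20, and the public ones continue from 10.0.48.0/20, leaving the rest of the /16 free for later growth.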

SMALL FOOTPRINT ARCHITECTURE

RESOURCES DEPLOYED

- one VPC: three private and three public subnets in three different Availability Zones, with gateway-type VPC endpoint routes to S3 and DynamoDB (free of charge),

- one self-healing Kubernetes Master instance in one Availability Zone’s private subnet,

- one Node instance in an AutoScaling group spanning all Availability Zones,

- one self-healing bastion host in one Availability Zone's public subnet,

- the bastion host also acts as the NAT instance router for the private subnets,

- one Elastic IP address for the bastion host (NAT instance),

- one internal (optionally public) ELB load balancer for HTTPS access to the Kubernetes API,

- two CloudWatch Logs groups: one for the bastion host, one for Kubernetes Docker pods (optional),

- one Lambda function for graceful teardown using AWS SSM,

- two security groups: one for the bastion host, one for the Kubernetes hosts (Masters and Nodes),

- IAM roles for the bastion host, the K8s Nodes and the Master hosts,

- one S3 bucket for kops state store,

- one Route53 private zone for VPC (optional)
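The main networking difference between the two versions is where outbound traffic from the private subnets leaves the VPC. The Python sketch below models the private route table of each AZ under both versions. All resource IDs and prefix-list names are hypothetical placeholders; the only facts taken from the lists above are one NAT gateway per AZ (full scale), the bastion acting as the single NAT instance (small footprint), and gateway endpoint routes to S3 and DynamoDB (both).

```python
# Hypothetical resource IDs for illustration; real IDs come from the stack.
AZS = ["a", "b", "c"]
NAT_GATEWAYS = {az: f"nat-gw-{az}" for az in AZS}  # full scale: one per AZ
BASTION_NAT_INSTANCE = "i-bastion"                  # small footprint: single NAT instance
S3_ENDPOINT = "vpce-s3"                             # gateway endpoints exist in both versions
DYNAMODB_ENDPOINT = "vpce-dynamodb"


def private_routes(az: str, profile: str) -> dict[str, str]:
    """Return destination -> target for the private route table of one AZ."""
    routes = {
        # Gateway endpoints add prefix-list routes, so S3/DynamoDB traffic
        # never passes through the NAT device.
        "pl-s3": S3_ENDPOINT,
        "pl-dynamodb": DYNAMODB_ENDPOINT,
    }
    if profile == "full-scale":
        routes["0.0.0.0/0"] = NAT_GATEWAYS[az]  # egress stays in the same AZ
    elif profile == "small-footprint":
        routes["0.0.0.0/0"] = BASTION_NAT_INSTANCE  # all AZs share one NAT instance
    else:
        raise ValueError(f"unknown profile: {profile}")
    return routes


for az in AZS:
    print(az, private_routes(az, "full-scale")["0.0.0.0/0"], private_routes(az, "small-footprint")["0.0.0.0/0"])
```

With a NAT gateway per AZ, losing one Availability Zone only affects egress from that AZ; in the small-footprint version the bastion is a single point of failure for outbound traffic, which is an acceptable trade-off for testing and demos.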

LOGS

Optional: if you enable it in the template options, all container logs are sent to AWS CloudWatch Logs. In that case, container logs are not available locally through the Kubernetes API (for example, the "kubectl logs …" command fails with "Error response from daemon: configured logging driver does not support reading"). Check AWS CloudWatch / Logs / K8s* for container logs instead.
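The error above is what Docker returns when its logging driver ships logs to a remote backend instead of keeping a local copy. The Python sketch below builds a Docker daemon.json that uses Docker's awslogs driver. It assumes, without confirmation from the templates, that this is how the CloudWatch option is implemented; the region and log group name are placeholders.

```python
import json


# Assumption: the CloudWatch option switches Docker's logging driver to
# "awslogs". Region and group name are placeholders, not template values.
def awslogs_daemon_config(region: str, group: str) -> dict:
    """Build a Docker daemon.json that ships container stdout/stderr to CloudWatch Logs."""
    return {
        "log-driver": "awslogs",
        "log-opts": {
            "awslogs-region": region,
            "awslogs-group": group,
        },
    }


config = awslogs_daemon_config("eu-central-1", "K8s-example-pods")
print(json.dumps(config, indent=2))
```

With such a driver the logs live in CloudWatch rather than in the node's local log files, which is why "kubectl logs" cannot read them on older Docker versions. With AWS CLI v2, "aws logs tail <group-name> --follow" streams a log group from the terminal.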

ABSTRACT PAPER

Have a look at this abstract paper for the high-level details of this solution.

REFERENCES

- Kubernetes Open-Source Documentation: https://kubernetes.io/docs/

- Calico Networking: http://docs.projectcalico.org/

- kops documentation: https://github.com/kubernetes/kops/blob/master/docs/aws.md, https://github.com/kubernetes/kops/tree/master/docs

- Kubernetes Host OS versions: https://github.com/kubernetes/kops/blob/master/docs/images.md

- OpenVPN: https://github.com/tatobi/easy-openvpn

- Heptio Kubernetes Quick Start guide: https://aws.amazon.com/quickstart/architecture/heptio-kubernetes/

COSTS AND LICENSES

You are responsible for the cost of the AWS services used while running this deployment. The project is released under the Apache 2.0 open-source license.

You can visit TC2’s GitHub repository to download the templates and scripts for this public release of the deployment guide. Total Cloud Consulting will be updating this guide on a regular basis.